How to Scrape Amazon: Best Practices & Tools

Example: Scraping Amazon in Python

A Step-by-Step FastAPI Scraping Tutorial

Is eCommerce Web Scraping Legal?

In many jurisdictions, scraping publicly available eCommerce data is generally legal. However, it's important to scrape responsibly: respect the rate limits websites set, avoid republishing copyrighted content, and check the terms of service of the website you're scraping to stay compliant.

Scraping Data from Behind a Login

Many people ask: Is it legal to scrape data from behind a login?

While it's technically possible to automate logins using Playwright, Selenium, or ScrapingRocket's JavaScript scenario, scraping data from behind a login comes with legal risks, because logging in usually means you have accepted the site's terms of service.

⚠️ Scraping personal or sensitive information can be illegal and may violate privacy laws like GDPR or CCPA.

Why Scrape Amazon?

Amazon provides a massive amount of valuable data that can be used for both personal and business applications, such as:

- AI Training & Recommender Systems – Scraping product reviews or pricing history to train AI models for price predictions or product recommendations.

- Cost Comparison & Price Alerts – Many users and businesses don’t have time to manually track prices daily. Scraping allows for automated price monitoring using scripts or the ScrapingRocket API.

- Customer Preferences & Trend Analysis – Scraping product data helps businesses understand customer behavior, track market trends, and make data-driven decisions.

Why Use ScrapingRocket?

ScrapingRocket makes web scraping easier by removing the need for manual coding.

- AI-Powered Selector – Extract any data in under a minute by generating a config parser that you can modify as needed.

- AI-Generated Code – No need to waste time inspecting elements or tweaking selectors—ScrapingRocket writes the code for you!

🚀 With ScrapingRocket, you can automate and monetize eCommerce scraping faster than ever!

Comparing Traditional Scraping vs. ScrapingRocket AI Selector

Old Scraping Approach

Preparing a Production-Ready FastAPI Environment for Web Scraping

- Setting up FastAPI with Poetry

- Installing Playwright & httpx

- Creating an async Amazon scraper

- Why we chose FastAPI, async, Selectolax, and Playwright

- Writing unit tests

- Deploying to production

Why These Technologies?

🔹 Why Poetry for Package Management?

- Dependency Management – Handles Python package dependencies cleanly.

- Virtual Environments – Creates isolated environments without using `venv`.

- Faster Installation – Resolves dependencies faster than `pip`.

- Easier Deployment – Generates a `pyproject.toml` file for simpler setup.

📌 Installation:

pip install --user poetry

🔹 Why FastAPI for API Development?

- Asynchronous – Built for speed and efficiency.

- Lightweight & Fast – Faster than Flask due to Starlette & Pydantic.

- Automatic Docs – Generates Swagger and OpenAPI documentation automatically.

- Production-Ready – Can be deployed with Uvicorn or Gunicorn.

📌 Install FastAPI & Uvicorn

poetry add fastapi uvicorn

📌 Why Async for Web Scraping?

- Speed: Handles multiple requests at once.

- Efficient I/O: Avoids idle CPU time.

- Scalability: Handles more requests per second.
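To make the points above concrete, here is a minimal sketch of concurrent fetching with `asyncio`. It assumes a stand-in `fake_fetch` coroutine that sleeps instead of making a real network call, and the names `fetch_all` and `max_concurrency` are illustrative, not part of any library.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.2) -> str:
    """Stand-in for a network call: sleeps instead of doing real I/O."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def fetch_all(urls, max_concurrency: int = 5):
    """Fetch every URL concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        # The semaphore caps how many fetches run at the same time.
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(5)]
    start = time.perf_counter()
    pages = asyncio.run(fetch_all(urls))
    elapsed = time.perf_counter() - start
    print(f"Fetched {len(pages)} pages in {elapsed:.2f}s")
```

Because the waits overlap, the total time should stay close to a single request's latency rather than the sum of all of them. The semaphore is one simple way to keep concurrency polite toward the target site.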

📌 Why Selectolax Over BeautifulSoup?

| Feature | Selectolax 🚀 | BeautifulSoup ❌ |
|---|---|---|
| Speed | 5-30x faster | Slower |
| Memory Usage | Low | Higher |
| HTML Parsing | Uses the Modest or Lexbor engine | Slower default parser (html.parser) |
| CSS Selector Support | ✅ tree.css(SELECTOR) | ✅ soup.select(SELECTOR) |
| Handles Broken HTML | ✅ Better | ⚠️ Depends on the parser backend |

poetry add selectolax

📌 Why Playwright Instead of Selenium?

| Feature | Playwright 🚀 | Selenium ❌ |
|---|---|---|
| Speed | Generally faster | Generally slower |
| Headless Mode | ✅ Supported | ✅ Supported |
| Auto-Waiting | ✅ Built in | ❌ Needs explicit waits (e.g. WebDriverWait) |
| Browser Control | ✅ Chromium, Firefox, WebKit | ✅ Chrome, Firefox, Edge, Safari |
| Async Support | ✅ Native | ❌ Not native |

poetry add playwright

playwright install

playwright install-deps

📌 1️⃣ Set Up FastAPI with Poetry

📌 Create a new FastAPI project

poetry new amazon_scraper

cd amazon_scraper

📌 Install dependencies

poetry add fastapi uvicorn httpx playwright selectolax

📌 2️⃣ Create the Amazon Scraper

📂 Folder Structure

    amazon_scraper/
    │── app/
    │   ├── main.py
    │   ├── scraper.py
    │   ├── tests/
    │   │   ├── test_scraper.py
    │   ├── utils.py

📌 `main.py` (FastAPI API Endpoint)

    from fastapi import FastAPI

    from app.scraper import scrape_amazon

    app = FastAPI()


    @app.get("/scrape")
    async def scrape(url: str, render_js: bool = False):
        # Fetch the page (optionally rendering JavaScript) and return the parsed titles.
        return await scrape_amazon(url, render_js)

📌 `scraper.py` (Web Scraper Code)

    import httpx
    from playwright.async_api import async_playwright
    from selectolax.parser import HTMLParser

    # CSS selector for product titles on an Amazon search results page.
    TITLE_SELECTOR = "a.a-link-normal[class*='s-line-clamp-'].s-link-style h2.a-size-medium.a-spacing-none.a-color-base span"


    async def fetch_with_httpx(url: str):
        """Fetch raw HTML over plain HTTP; return None on any failure."""
        headers = {"User-Agent": "Mozilla/5.0"}
        async with httpx.AsyncClient(follow_redirects=True) as client:
            try:
                response = await client.get(url, headers=headers)
            except httpx.HTTPError:
                return None  # network error, timeout, etc.
            return response.text if response.status_code == 200 else None


    async def fetch_with_playwright(url: str):
        """Fetch HTML after JavaScript rendering in headless Chromium."""
        async with async_playwright() as p:
            browser = await p.chromium.launch(headless=True)
            page = await browser.new_page()
            await page.goto(url, timeout=60000)  # timeout in milliseconds
            content = await page.content()
            await browser.close()
            return content


    async def scrape_amazon(url: str, render_js: bool):
        """Return the product titles found on the page at `url`."""
        html = await fetch_with_playwright(url) if render_js else await fetch_with_httpx(url)
        if not html:
            return {"error": "Failed to fetch the page"}
        tree = HTMLParser(html)
        titles = [node.text() for node in tree.css(TITLE_SELECTOR)]
        return {"titles": titles}

📌 3️⃣ Run the FastAPI Server

uvicorn app.main:app --reload

📌 Test the API

http://127.0.0.1:8000/scrape?url=https://www.amazon.com/s?k=PlayStation+DualSense&render_js=false
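One detail worth noting about the test URL: the target address itself contains `?`, `=` and `+`, so it is probably safer to percent-encode it before placing it in the `url` query parameter (an unencoded `+` is typically decoded as a space by the server). A minimal sketch using only the standard library, assuming the local server address shown above; the helper name `build_scrape_url` is hypothetical:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

API_BASE = "http://127.0.0.1:8000/scrape"  # local FastAPI server from the step above


def build_scrape_url(target: str, render_js: bool = False) -> str:
    """Return the /scrape URL with `target` percent-encoded as a query value."""
    query = urlencode({"url": target, "render_js": str(render_js).lower()})
    return f"{API_BASE}?{query}"


if __name__ == "__main__":
    target = "https://www.amazon.com/s?k=PlayStation+DualSense"
    full_url = build_scrape_url(target)
    print(full_url)
    # Decoding the query string gives back the original target unchanged.
    decoded = parse_qs(urlsplit(full_url).query)
    assert decoded["url"] == [target]
```

HTTP clients such as httpx perform the same encoding when the values are passed through their `params` argument instead of being pasted into the URL string.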

📌 4️⃣ Write Unit Tests

📂 tests/test_scraper.py

    import pytest

    from app.scraper import scrape_amazon


    # Requires the pytest-asyncio plugin. Note that this test calls the live site.
    @pytest.mark.asyncio
    async def test_scraper():
        url = "https://www.amazon.com/s?k=laptop"
        result = await scrape_amazon(url, render_js=False)
        assert isinstance(result, dict)
        assert "titles" in result

📌 Run tests

pytest
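Since the test above depends on a live Amazon page, it may fail for reasons unrelated to the code (blocking, layout changes, no network). One possible complement is an offline test against a fixed HTML fixture. The sketch below is an assumption-heavy illustration: it injects the fetcher as a parameter and uses the standard library's `html.parser` as a stand-in for Selectolax so that it runs without extra dependencies. The names `extract_titles`, `scrape`, `FIXTURE`, and the fake fetchers are all hypothetical.

```python
import asyncio
from html.parser import HTMLParser


class H2TextCollector(HTMLParser):
    """Collects the text inside every <h2> element (stand-in for a CSS selector)."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2:
            self.titles[-1] += data


def extract_titles(html: str) -> list[str]:
    collector = H2TextCollector()
    collector.feed(html)
    return [t.strip() for t in collector.titles if t.strip()]


async def scrape(url: str, fetch) -> dict:
    """Same result shape as scrape_amazon, but the fetcher is injected for testing."""
    html = await fetch(url)
    if not html:
        return {"error": "Failed to fetch the page"}
    return {"titles": extract_titles(html)}


FIXTURE = "<div><h2><span>Laptop A</span></h2><h2><span>Laptop B</span></h2></div>"


async def fake_fetch(url: str) -> str:
    return FIXTURE  # no network access


async def failing_fetch(url: str):
    return None  # simulates a blocked or failed request


def test_scrape_offline():
    ok = asyncio.run(scrape("https://example.com", fake_fetch))
    assert ok == {"titles": ["Laptop A", "Laptop B"]}
    failed = asyncio.run(scrape("https://example.com", failing_fetch))
    assert failed == {"error": "Failed to fetch the page"}
```

The design choice here is simply to keep parsing separate from fetching, so the parsing logic can be checked deterministically while the live test remains an optional smoke test.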

📌 5️⃣ Deploying to Production

1️⃣ Install Gunicorn

poetry add gunicorn

2️⃣ Run Production Server

gunicorn -k uvicorn.workers.UvicornWorker app.main:app

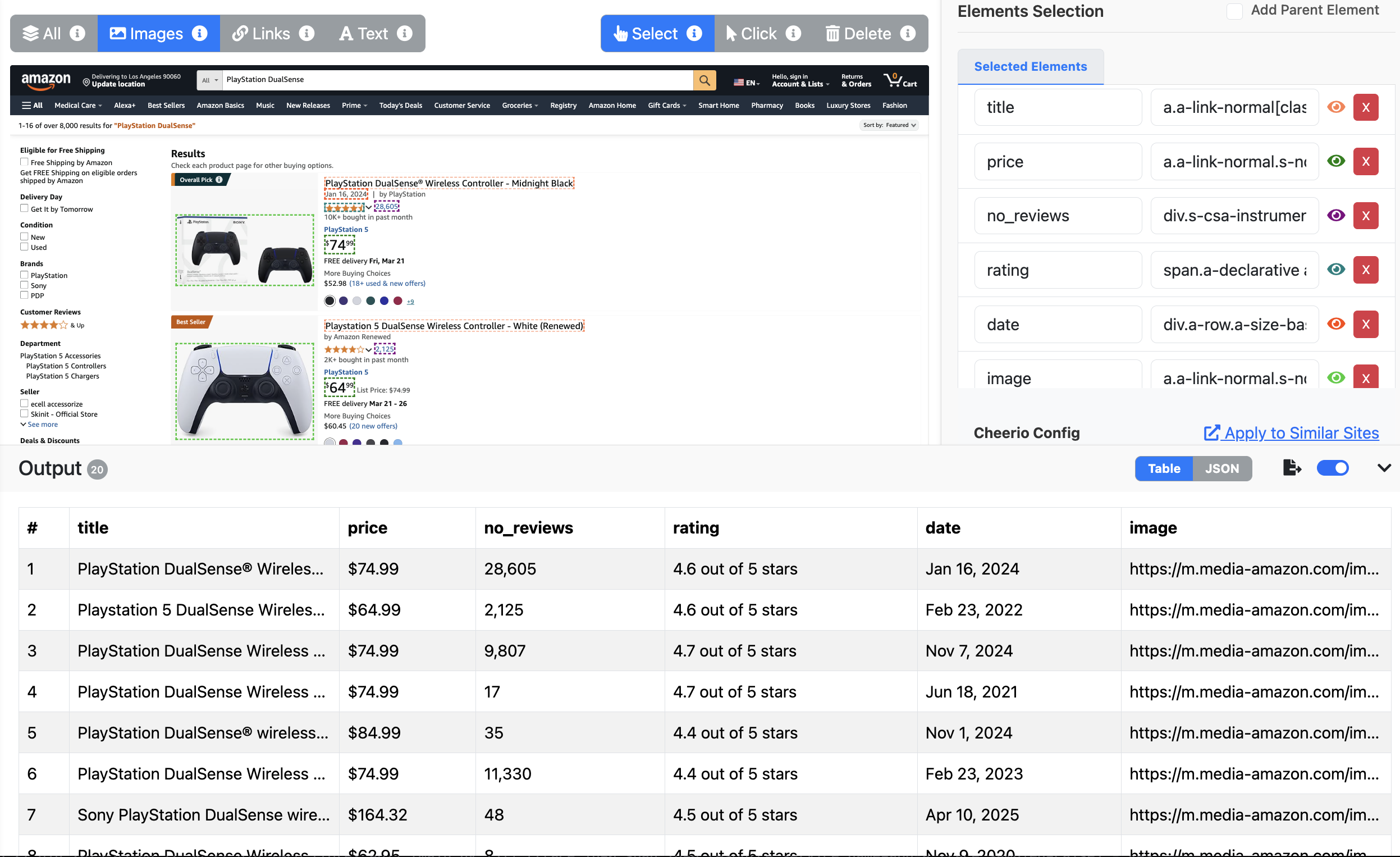

ScrapingRocket AI Approach

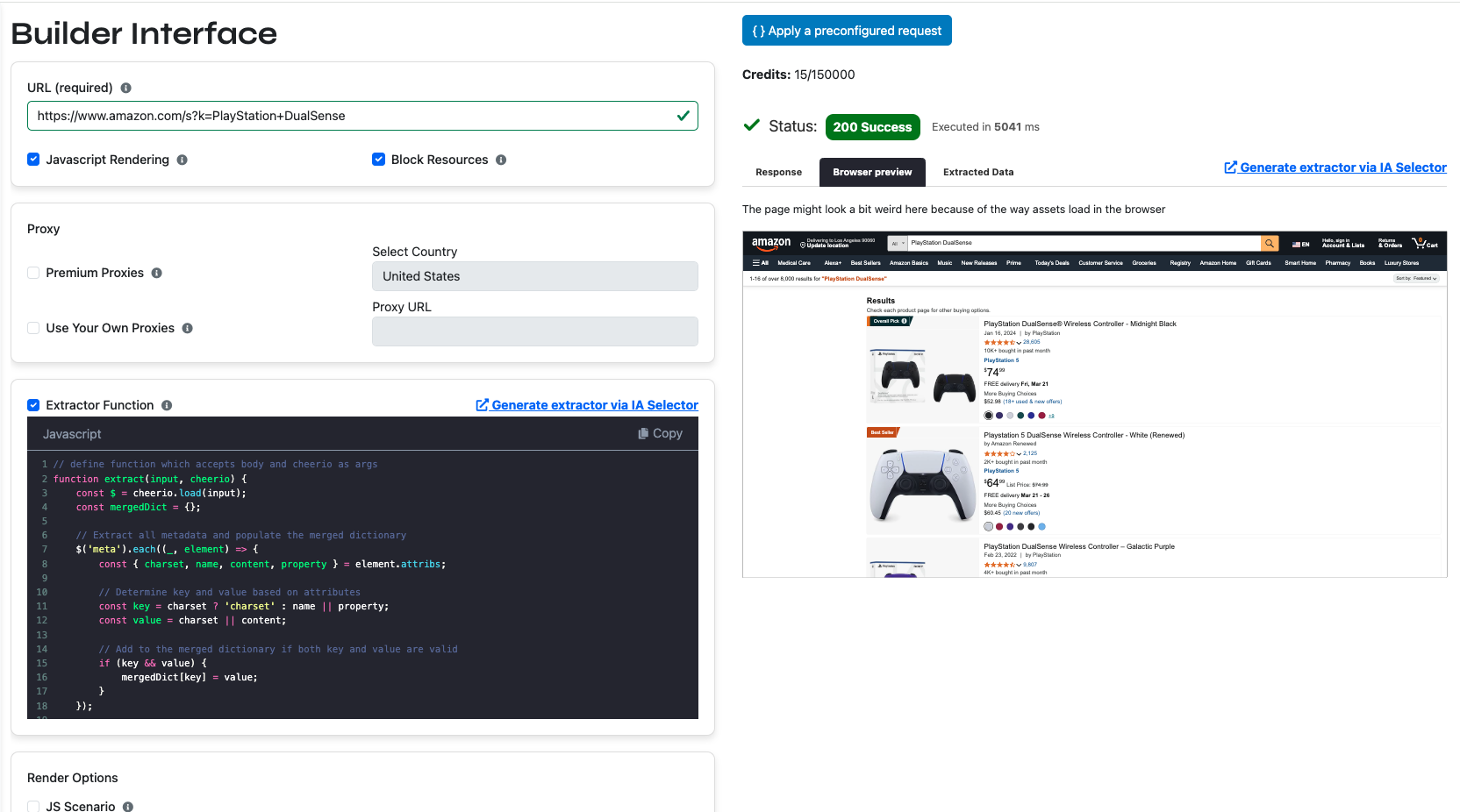

Step 1: Set Up the Request

- Enter the URL into the request builder.

- Choose whether to enable JavaScript rendering.

- Customize proxies—either use your own or premium ones.

- Integrate your Cheerio configuration parser.

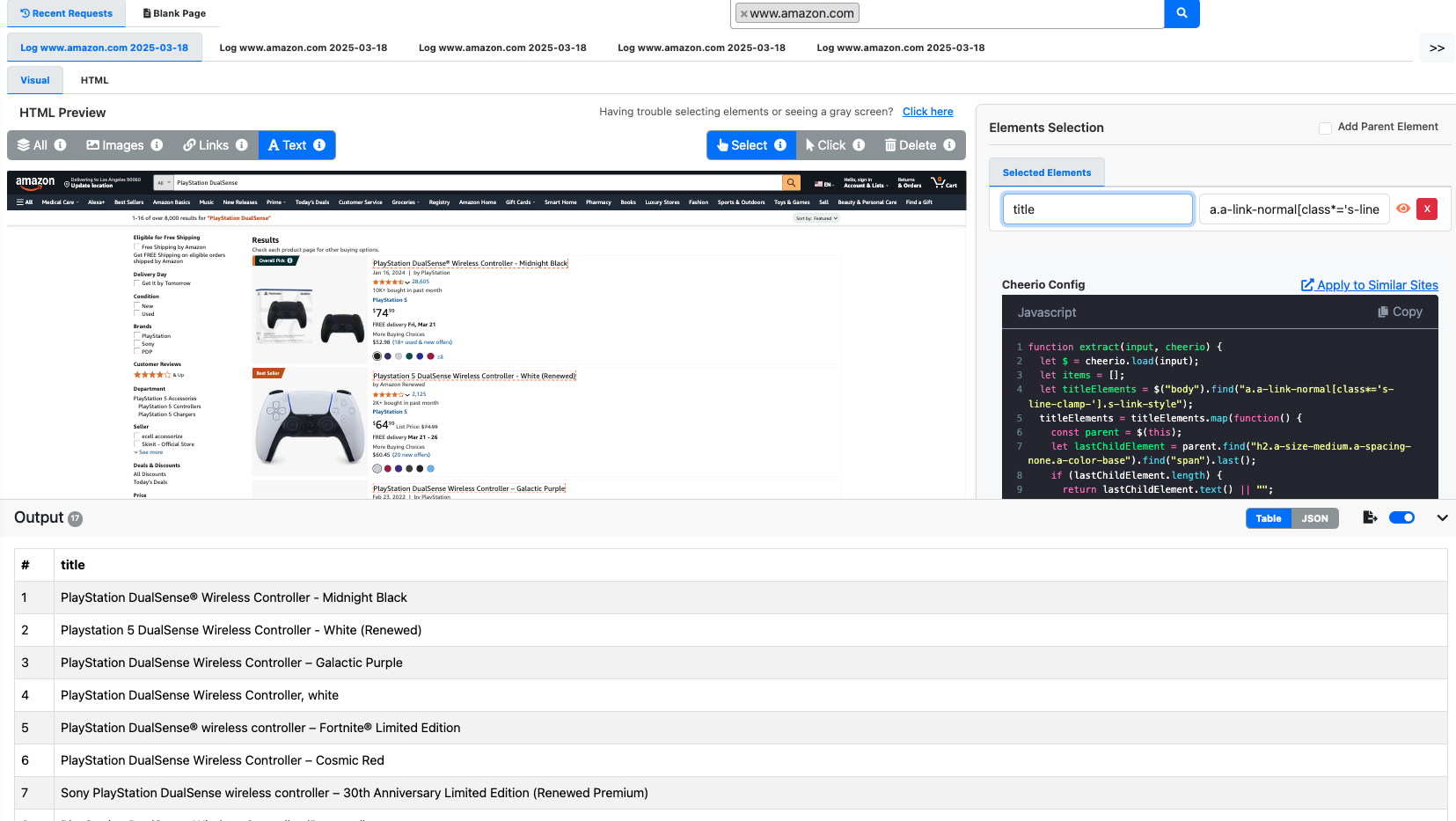

Step 2: No Need for Manual Inspection

- No more wasting time inspecting elements.

- With one click, ScrapingRocket extracts all necessary details.

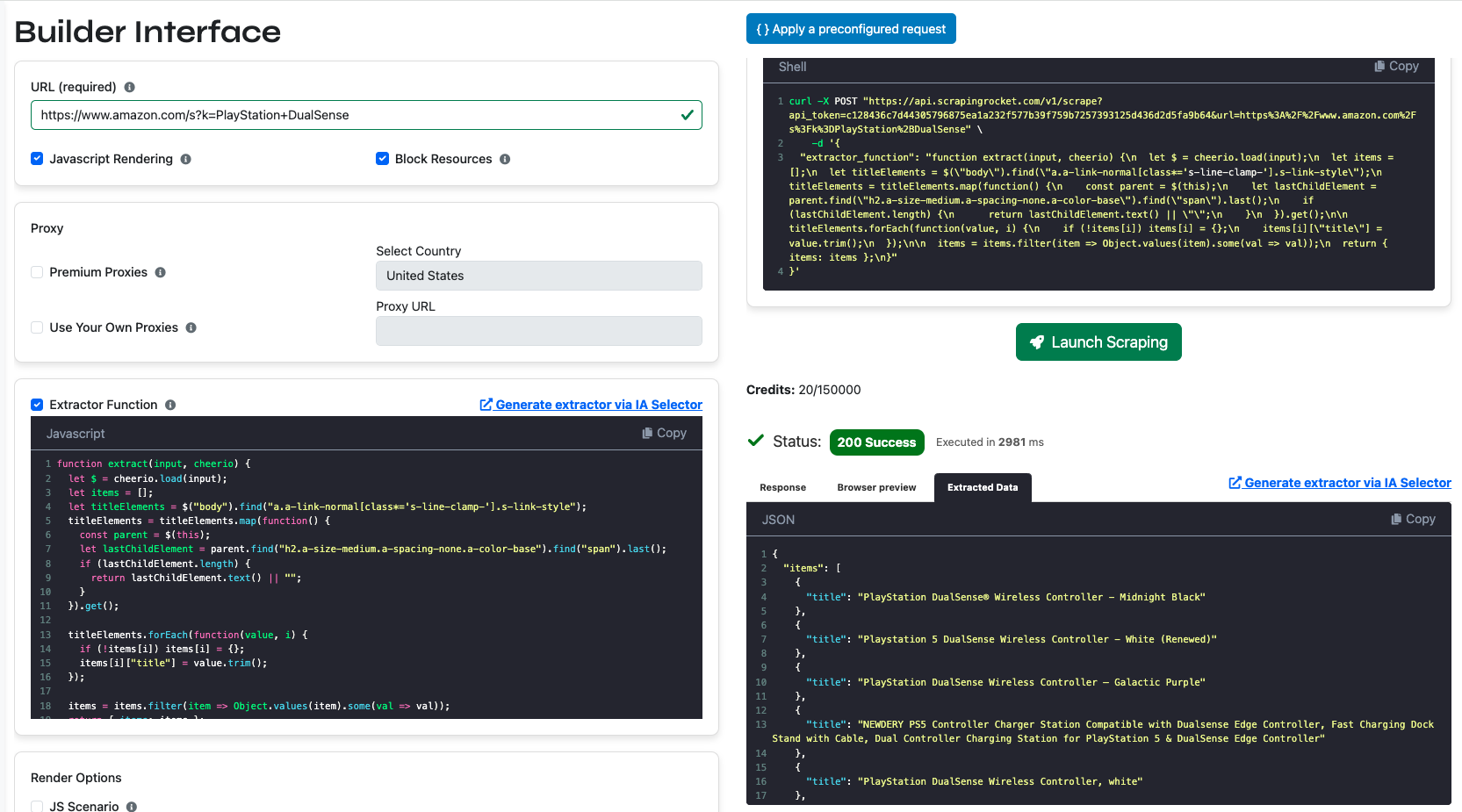

Step 3: Get the Extracted Data Instantly

- Once you send the request via the API, you receive structured data immediately.

🚀 With ScrapingRocket, you can build and deploy a scraper in just a few clicks—without writing code!

Challenges to Consider When Scraping Amazon for a Robust Solution

- Rate Limiting – Many eCommerce websites limit how often a client can access their data. If you send too many requests too quickly from a single source, they will slow you down or block you entirely.

- CAPTCHAs – Websites use challenges such as Google reCAPTCHA v2, or the harder-to-bypass reCAPTCHA v3, to make automated access time-consuming and complex.

- IP Blocking – Many websites detect IPs associated with web scraping or automation. They can often identify tools like Selenium, or even plain HTTP requests, and block your IP.

- Dynamic Content – Some websites require JavaScript to load their content, especially modern React or Next.js sites, where data is fetched dynamically and then rendered on the frontend.

- Frequently Changing Classes or Elements – Some eCommerce websites update their HTML structure regularly, meaning you have to constantly tweak your CSS or XPath selectors to keep up.

- Headers Analysis – Some websites analyze request headers to detect automated access. To avoid detection, you need to rotate headers and mimic real browser behavior.
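For the rate-limiting challenge in particular, a common mitigation is to retry with exponential backoff and jitter rather than hammering the site. The following is a minimal sketch, not a definitive implementation: `RateLimited` is a hypothetical exception that your own fetch function would raise on a response such as HTTP 429, and `fetch_with_backoff` and its parameters are illustrative names.

```python
import asyncio
import random


class RateLimited(Exception):
    """Hypothetical error raised by a fetcher when the site signals throttling."""


async def fetch_with_backoff(fetch, url, max_attempts=5, base_delay=1.0, sleep=asyncio.sleep):
    """Call fetch(url); on RateLimited, wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return await fetch(url)
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            # Exponential growth spaces retries out; jitter avoids synchronized bursts.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            await sleep(delay)
```

The `sleep` parameter exists only so the waiting can be replaced in tests. In normal use you would call `await fetch_with_backoff(my_fetch, url)` and leave it at the default.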

DeepSeek vs. ChatGPT vs. ScrapingRocket: Which Scraping Solution is Better?

When it comes to extracting data from Amazon, different tools handle the task in different ways. We compared ChatGPT, DeepSeek, and ScrapingRocket by providing them with an Amazon product page's raw HTML and asking them to extract all product titles.

Challenges in Using AI for Scraping

- Processing Time – AI models like ChatGPT and DeepSeek are not optimized for real-time web scraping. They process large HTML inputs slowly compared to dedicated scraping solutions.

- Accuracy – AI models can struggle with inconsistent or dynamic HTML structures. If a website changes its layout frequently, AI-based parsing requires constant adjustments.

- Handling Large HTML Inputs – Amazon pages are massive, filled with product listings, ads, and scripts. AI models often struggle to efficiently parse such large inputs, leading to slow responses or incomplete data extraction.
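If you do want to pass page content to a general-purpose AI model, one way to reduce the input size is to drop scripts, styles, and markup first and keep only the visible text. The sketch below uses only the standard library and is an illustration under assumptions, not the method used in the comparison above; the names `VisibleTextExtractor`, `visible_text`, and `SKIPPED_TAGS` are hypothetical.

```python
from html.parser import HTMLParser

# Elements whose contents are never useful as visible page text.
SKIPPED_TAGS = {"script", "style", "noscript", "svg"}


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes, ignoring anything nested inside SKIPPED_TAGS."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    """Return the page's visible text, one text node per line."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    return "\n".join(parser.chunks)
```

On a real Amazon page, most of the bytes tend to be scripts and styling, so a reduction like this can shrink the prompt considerably, at the cost of losing the structure that CSS selectors rely on.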

Final Verdict

While ChatGPT and DeepSeek can assist with structuring scraping logic, they are not built for high-performance scraping.

✅ ScrapingRocket, on the other hand, is designed for speed, accuracy, and adaptability, making it the more reliable solution for web scraping at scale. 🚀

ScrapingBee vs. ScrapingRocket: Which Scraping Solution is Better?

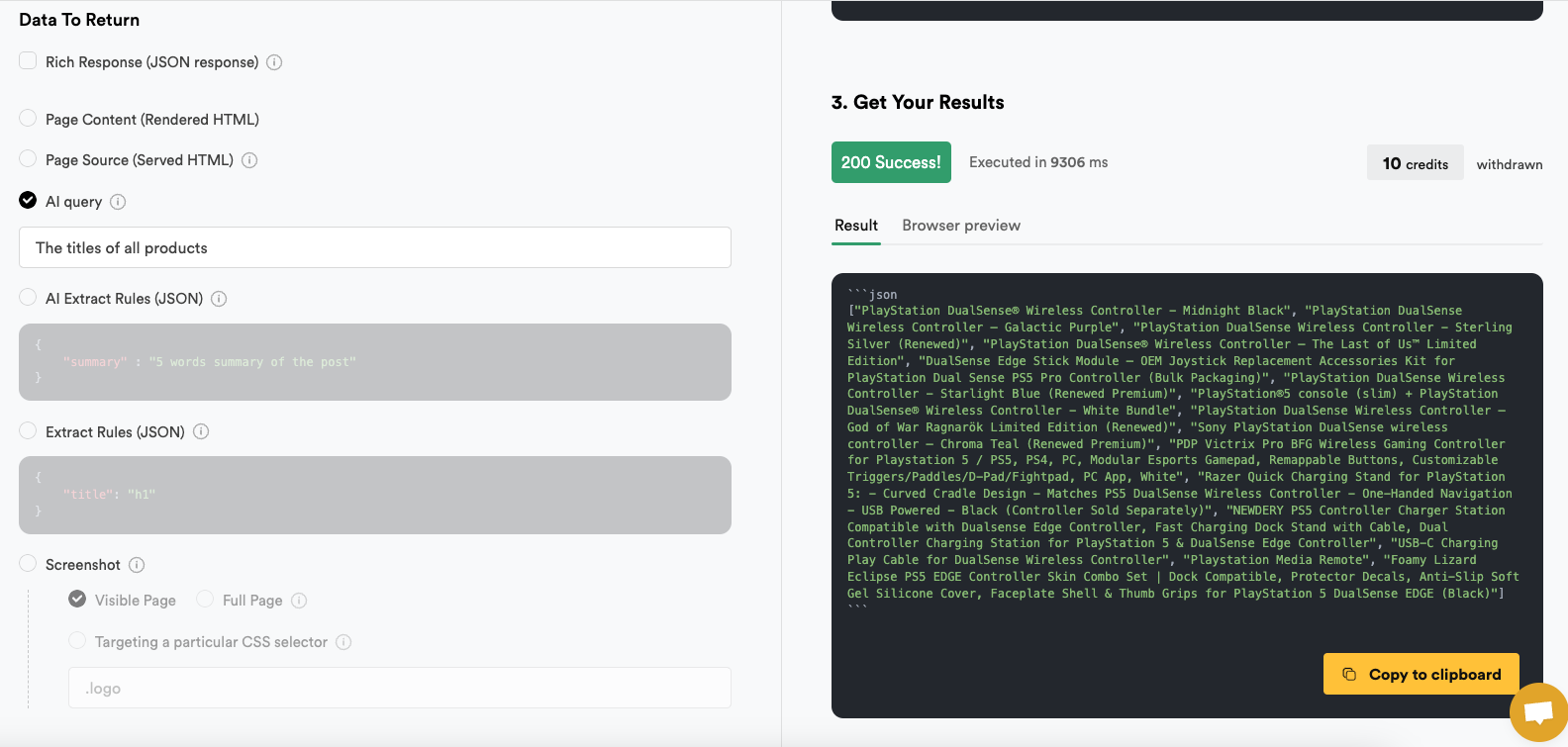

ScrapingBee recently launched their AI Query feature, allowing users to parse details by providing a query prompt. However, keep in mind that:

- ScrapingBee's AI Query is limited compared to ScrapingRocket.

- Every time you want to fetch new data, you must send a new query, and each query costs an additional 5 credits.

- ScrapingRocket offers more flexibility—it’s free to use and fully customizable.

ScrapingBee: AI Query Example

ScrapingRocket: Fully Customizable Parameters

With ScrapingRocket, you can simply add a new pricing parameter, rename it, or customize it without any additional cost.

- Modify pricing parameters dynamically.

- Rename fields as needed.

- Add as many parameters as you want without extra charges.

🚀 ScrapingRocket gives you more control, unlimited customization, and cost-free adjustments!

Conclusion

And that's it! Today, we talked about scraping Amazon shopping results and learned how to fetch Amazon product details. We wrote Python scripts to achieve this using the "classic" approach with CSS selectors, and we also looked at ScrapingRocket's AI selector.

By customizing the code, you can further adapt the scraper to suit your needs.

I hope you found this tutorial helpful. As always, thank you for staying with me—happy scraping! 🚀

Best Practices

Scraping eCommerce websites has become increasingly challenging over the years, as companies continuously improve their anti-scraping mechanisms. To overcome these challenges and bypass CAPTCHAs, we must follow best scraping practices, such as:

- Rotate proxies & User-Agent headers to avoid being blocked, as websites can easily detect multiple requests from the same IP and ban it.

- If using Python, always add headers and rotate them. You can use the `user-agent` library.
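As a rough illustration of header rotation without any third-party library, the sketch below picks a User-Agent at random for each request. The pool of strings is a hypothetical example and would need to be kept current with real browser releases; `build_headers` is an illustrative name.

```python
import random

# Hypothetical pool; keep these in line with current browser versions.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def build_headers(rng=random):
    """Return request headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    }
```

In the `fetch_with_httpx` function from the tutorial, a call to `build_headers()` could replace the fixed `headers` dictionary so that each request goes out with a different browser signature.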

Bypassing Cloudflare and Other Protections

Many websites use Cloudflare and advanced anti-bot systems like DataDome to block automated scrapers. To bypass these, we can use third-party services such as anti-CAPTCHA solutions to solve CAPTCHA challenges and inject dynamically generated cookies for authentication.

📌 Before You Proceed:

- Ensure you have anticaptchaofficial version 1.0.46 or higher:

pip install 'anticaptchaofficial>=1.0.46'

Example Code for Bypassing DataDome with AntiCaptcha

    import requests
    # Module name as used in Anti-Captcha's own examples; verify against your installed version.
    from anticaptchaofficial.antibotcookietask import antibotcookieTask

    # Proxy configuration (use a dedicated private proxy)
    proxy_host = "YOUR_PROXY_IP"
    proxy_port = 8080  # replace with YOUR_PROXY_PORT (an integer)
    proxy_login = "YOUR_PROXY_LOGIN"
    proxy_pass = "YOUR_PROXY_PASSWORD"

    # API key from Anti-Captcha
    API_KEY = "YOUR_ANTI_CAPTCHA_API_KEY"

    # Initialize the solver
    solver = antibotcookieTask()
    solver.set_key(API_KEY)
    solver.set_website_url("https://target-website.com/protected-page")
    solver.set_proxy_address(proxy_host)
    solver.set_proxy_port(proxy_port)
    solver.set_proxy_login(proxy_login)
    solver.set_proxy_password(proxy_pass)

    # Solve and get authentication data
    result = solver.solve_and_return_solution()
    if not result:
        raise SystemExit("Failed to retrieve cookies. Try again.")

    # Extract cookies and headers for requests
    cookies, fingerprint = result["cookies"], result["fingerprint"]
    cookie_string = "; ".join(f"{key}={value}" for key, value in cookies.items())
    user_agent = fingerprint["self.navigator.userAgent"]
    print(f"Use these cookies: {cookie_string}")
    print(f"Use this user-agent: {user_agent}")

    # Make the actual request with the solved cookies, through the same proxy
    proxy_url = f"http://{proxy_login}:{proxy_pass}@{proxy_host}:{proxy_port}"
    session = requests.Session()
    session.proxies = {"http": proxy_url, "https": proxy_url}
    headers = {"User-Agent": user_agent, "Cookie": cookie_string}
    response = session.get("https://target-website.com/protected-page", headers=headers)
    print(response.text)  # HTML content of the page

Need More Details?

This method works by resolving Cloudflare/DataDome challenges and injecting valid authentication tokens dynamically.

If you want an in-depth tutorial on bypassing DataDome and Cloudflare with anti-captcha services, check out this guide:

🔗 Tutorial on Bypassing Cloudflare Protections

🚀 With this setup, you can bypass bot protections and scrape websites successfully!

Parsing Data: Tools & Best Practices

Once you've bypassed Cloudflare, DataDome, or other protections, the next challenge is parsing the scraped data efficiently. Choosing the right tool depends on your tech stack and performance needs.

🔹 Python: BeautifulSoup & Selectolax

- BeautifulSoup – Easy to use but slower for large-scale parsing.

      from bs4 import BeautifulSoup

      soup = BeautifulSoup(html, "html.parser")
      titles = [tag.text for tag in soup.select("h2.title")]

- Selectolax – Faster, optimized for large HTML files.

      from selectolax.parser import HTMLParser

      tree = HTMLParser(html)
      titles = [node.text() for node in tree.css("h2.title")]

🔹 JavaScript: Cheerio

For Node.js users, Cheerio is a popular lightweight HTML parser:

    const cheerio = require('cheerio');

    const $ = cheerio.load(html);
    const titles = $("h2.title").map((_, el) => $(el).text()).get();
    console.log(titles);

Final Thoughts

- For simple HTML parsing: Use BeautifulSoup or Cheerio.

- For large-scale scraping: Use Selectolax (Python) or Cheerio (Node.js).

- For JavaScript-heavy pages: Use Playwright or Puppeteer.

🚀 Choosing the right tool ensures fast, accurate data extraction!
